The Kill Decision Problem: Why the Hardest Portfolio Choice Is the One Nobody Wants to Make

Fifty percent of kill decisions happen too late. The frameworks to fix this exist. The courage to use them is rare.

The Paradox Nobody Talks About

In January 2025, McKinsey published a striking finding from their analysis of top biopharma portfolio strategies: leading pharmaceutical companies discontinue 21-22% of their programs annually. That sounds like disciplined portfolio management. Until you read the next line.

Fifty percent of those terminations happen in Phase II or Phase III.

By that point, the company has already invested anywhere from $100 million to $500 million. The clinical teams have spent years on the program. The commercial organization has built forecasts around it. And somewhere, patients enrolled in trials that will never lead to an approved medicine.

The industry terminates programs. It just terminates them far too late, after the damage is done.

This is the paradox at the heart of pharmaceutical portfolio management: companies are making kill decisions, but they're making them when it no longer matters. The question isn't whether pharmaceutical companies can stop failing programs. They do, eventually. The question is why it takes so long. Why do programs that show warning signs in Phase I survive into Phase III? Why do portfolio reviews consistently uncover "zombie programs" that have been consuming resources for years past their expiration date?

The answer has less to do with science and more to do with organizational dynamics that make stopping a program one of the most difficult decisions any leader can make.

Consider the math. Approximately 90% of drugs that enter clinical trials will fail. The industry spends over $80 billion annually on R&D in the United States alone. The average cost to develop an approved drug now exceeds $3.5 billion, with failed programs consuming the lion's share of that investment. Every month a failing program continues, it absorbs budget, headcount, and leadership attention that could flow to programs with better odds.

Yet despite decades of awareness, despite countless articles and frameworks and consulting engagements, the pattern persists. Late-stage attrition remains stubbornly high. A 2024 analysis of 200 pharmaceutical firms and more than 80,000 clinical trials found that "despite recent stabilization, the pharmaceutical industry continues to face challenges, particularly due to elevated late-stage clinical attrition, suggesting that a sustained turnaround in R&D efficiency remains elusive."

The playbook for disciplined termination exists. It's been published in peer-reviewed journals. Companies that have implemented it have achieved remarkable results. So why hasn't the industry followed?

The answer begins with understanding why the kill decision is structurally, behaviorally, and politically the hardest choice a portfolio leader will ever face.

Three Forces That Make Stopping Nearly Impossible

The difficulty of kill decisions isn't a mystery. It's a predictable outcome of three forces that cascade and reinforce each other: cognitive biases that cloud judgment, governance structures that diffuse accountability, and career incentives that make silence the rational choice.

The first force is cognitive. A 2023 survey of 92 pharmaceutical R&D practitioners identified the biases that most frequently distort portfolio decisions: confirmation bias, champion bias, and misaligned incentives topped the list. Confirmation bias leads teams to weight positive signals heavily while explaining away warning signs. Champion bias means the most passionate advocate for a program often has the loudest voice in the room, regardless of the data. And misaligned incentives ensure that the people closest to a program, those who would be most affected by its termination, are often the ones presenting the evidence.

None of this is malicious. Scientists believe in their work. They should. The same conviction that drives someone to spend years on a difficult problem also makes it hard to accept that the problem might be unsolvable, or that the solution won't work in patients. The bias isn't a character flaw. It's human nature operating exactly as expected in an environment that doesn't account for it.

The second force is structural. Most pharmaceutical companies make portfolio decisions through committees. In theory, committees provide diverse perspectives and collective wisdom. In practice, they diffuse accountability. When a committee of twelve people approves a program's continuation, no single person owns that decision. If the program later fails, responsibility dissolves into "the committee decided." This makes it easier to say yes than to say no. Approving continuation feels like maintaining consensus. Advocating for termination means standing alone, challenging colleagues, potentially being wrong in a very visible way.

When everyone is responsible, no one is responsible. The governance structures designed to ensure rigor inadvertently create permission for inaction.

As we explored in Headcount Is Not Capability, the difference between suboptimal and superior outcomes often comes down to whether someone truly owns a decision or is merely assigned to it. Committee-based portfolio governance creates assignment without ownership.

The third force is personal. This is where the system truly locks in place. Consider the asymmetry facing anyone who might raise concerns about a program's viability.

If you flag a risk early and the team addresses it, you receive little credit. The problem was solved. It never became visible. If you flag a risk early and the program fails anyway, you're associated with the failure. You saw it coming. Why didn't you do more? And if you stay quiet and the program fails, you share the outcome with everyone else. Plausible deniability. The math favors silence.

Now add career stakes. The scientist who has spent five years on a program doesn't just face professional disappointment if it's terminated. They face reassignment, uncertainty, potentially a layoff if the company is tightening budgets. The project leader whose program gets killed doesn't get promoted for disciplined resource allocation. They get a smaller team and fewer opportunities. The executive who terminates a high-profile program doesn't get celebrated for courage. They get questioned about why they let it get so far.

The system punishes those who stop failing programs and protects those who let them continue. Not by design. But by the accumulation of individual incentives that make continuation the safer path.

These three forces, cognitive, structural, and personal, don't operate in isolation. They cascade. Biased data reaches a committee that has no individual accountability, which makes a decision that nobody will be blamed for, which allows a program to continue that someone suspected should stop but chose not to say. The next quarter, the pattern repeats.

This is why the kill decision is so hard. It requires someone to overcome their own biases, stand apart from the committee, accept personal career risk, and do it all while genuinely uncertain whether they're right. That's not a decision most organizational systems are designed to support.

The Root Cause: Who Decides, Who Benefits

Beneath the cognitive biases, the diffuse governance, and the career incentives lies a more fundamental problem. In most pharmaceutical organizations, the people who benefit most from a program's continuation are the same people who provide the evidence for continuation decisions.

This isn't a conspiracy. It's an organizational design that evolved naturally. Who knows the most about a program's science? The team working on it. Who understands the nuances of the data? The scientists who generated it. Who can speak to the competitive landscape and commercial potential? The people who have been tracking it for years. Of course these are the voices in the room when portfolio decisions get made.

But this creates an inherent conflict. The team presenting the data has careers, identities, and years of work tied to the program's survival. They aren't lying when they present optimistic interpretations. They believe them. Confirmation bias isn't about dishonesty. It's about the human tendency to see what we hope to see, especially when the stakes are high.

A 2015 analysis in Nature Reviews Drug Discovery examined why it's so hard to terminate failing projects in pharmaceutical R&D. The authors identified a core dynamic: decision-makers often lack the objectivity to assess programs they're personally invested in, and organizations rarely create structures that compensate for this. The result is predictable. Programs continue past the point where an objective observer would have stopped them.

The solution seems obvious: separate the decision from the deciders. Bring in external reviewers. Create independent assessment bodies. Some companies have tried this. But implementation runs into the same organizational dynamics it's trying to solve. External reviewers lack context. Independent bodies slow down decisions. And the teams being evaluated experience the process as judgment rather than support.

The deeper issue is cultural. In most organizations, terminating a program feels like failure. The language gives it away. We talk about "killing" programs, about "pulling the plug," about projects "dying." This framing makes termination something to be avoided, delayed, mourned. It takes courage to be the person who delivers death.

But what if termination isn't failure? What if stopping a program that isn't working is one of the most valuable things a portfolio leader can do? Every resource tied up in a program past its useful life is a resource unavailable for a program that might succeed. Every scientist working on a molecule that will never reach patients is a scientist not working on one that could.

The companies that have solved this problem didn't just add new governance processes. They changed what termination means. They made stopping a program a sign of disciplined thinking, not defeat. They created environments where the person who raises the uncomfortable question is thanked, not blamed.

This is harder than it sounds. It requires leaders willing to reward behavior that feels counterintuitive. It requires systems that protect people who deliver bad news. It requires a culture that genuinely believes resources are better freed than hoarded.

Most organizations say they want this. Few have built it.

The Proof That It Can Be Done

If the barriers to disciplined kill decisions were insurmountable, we could accept the status quo as inevitable. But they're not. A small number of companies have implemented systematic approaches to portfolio discipline and achieved results that should make the rest of the industry take notice.

Pfizer's SOCA Framework

In the early 2010s, Pfizer faced the same productivity challenges as the rest of the industry. Their response was a systematic overhaul of how they evaluated and advanced programs, built around what they called the SOCA paradigm (Signs of Clinical Activity). The results, published in Drug Discovery Today in 2022, were striking: success rates improved from approximately 2% to 21%, roughly a tenfold increase.

What made SOCA work wasn't magic. It was rigor applied consistently. The framework established quantitative criteria for advancement that teams had to meet, not argue around. It created stage-gate decisions with real teeth. And critically, it shifted the burden of proof. Instead of requiring evidence to stop a program, it required evidence to continue one.

Lilly's Chorus Model

Eli Lilly took a different approach with a similar philosophy. Their Chorus model, described in Nature Reviews Drug Discovery in 2015, created a separate organization focused specifically on early development. The goal was to test hypotheses quickly and cheaply, terminating programs that didn't meet predefined criteria before significant investment accumulated.

The results were remarkable. Phase II success rates improved from 29% to 54%, an 86% relative improvement. Overall productivity increased three- to tenfold compared to traditional approaches. Programs that would have lingered for years were resolved in months. Resources flowed to the programs that earned them.

The key insight from Chorus was structural separation. By creating a distinct organization with different incentives, Lilly insulated early decisions from the political dynamics that plague portfolio management in larger organizations. The Chorus team's success was measured by learning velocity, not program survival.

AstraZeneca's 5R Framework

Perhaps the most thoroughly documented transformation came from AstraZeneca. In 2018, Nature Reviews Drug Discovery published their analysis of the 5R framework (Right target, Right tissue, Right safety, Right patient, Right commercial potential). The numbers told the story: project success rates improved from 4% to 23%, a nearly sixfold improvement.

But the 5R paper revealed something beyond process. It described a cultural transformation toward what the authors called a "truth-seeking culture." This meant creating an environment where scientists were rewarded for finding reasons a program wouldn't work, not punished for it. It meant celebrating disciplined termination as an act of scientific integrity, not organizational failure.
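The headline figures quoted for the three case studies are easy to sanity-check. The short Python sketch below recomputes the fold change and relative improvement from the before and after success rates as stated in this article. The function name `relative_change` and the dictionary labels are illustrative only and do not come from the cited papers.

```python
def relative_change(before: float, after: float) -> tuple[float, float]:
    """Return (fold change, relative improvement in percent)."""
    fold = after / before
    return fold, (fold - 1.0) * 100.0


# Success rates (in percent) exactly as quoted in the text above.
reported = {
    "Pfizer": (2.0, 21.0),
    "Lilly Chorus (Phase II)": (29.0, 54.0),
    "AstraZeneca 5R": (4.0, 23.0),
}

for name, (before, after) in reported.items():
    fold, pct = relative_change(before, after)
    print(f"{name}: {before:.0f}% -> {after:.0f}% "
          f"is {fold:.2f}x ({pct:.0f}% relative improvement)")
```

Note that a "tenfold increase" and an "86% relative improvement" describe the same kind of ratio on different scales: the first is the fold change, the second is the fold change minus one, expressed as a percentage.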

The Common Thread

These three examples span different companies, different therapeutic areas, and different organizational structures. But they share remarkable similarities in what made them work.

First, prespecified quantitative criteria. All three frameworks established clear, measurable thresholds before programs began, not after data emerged. This removed the temptation to move goalposts when results disappointed.

Second, external or independent review. Whether through separate organizations like Chorus or rigorous stage-gates like SOCA, all three created mechanisms to separate decision-makers from program advocates.

Third, cultural permission to stop. Each company invested in changing what termination meant. Stopping a program wasn't failure. It was the system working as intended.

Fourth, protection for truth-tellers. The person who raised the uncomfortable question, who pointed to the data suggesting a program should stop, was recognized rather than marginalized.

The playbook exists. It's been published in peer-reviewed journals. The results are documented. The question is why so few companies have followed.

The Questions That Matter

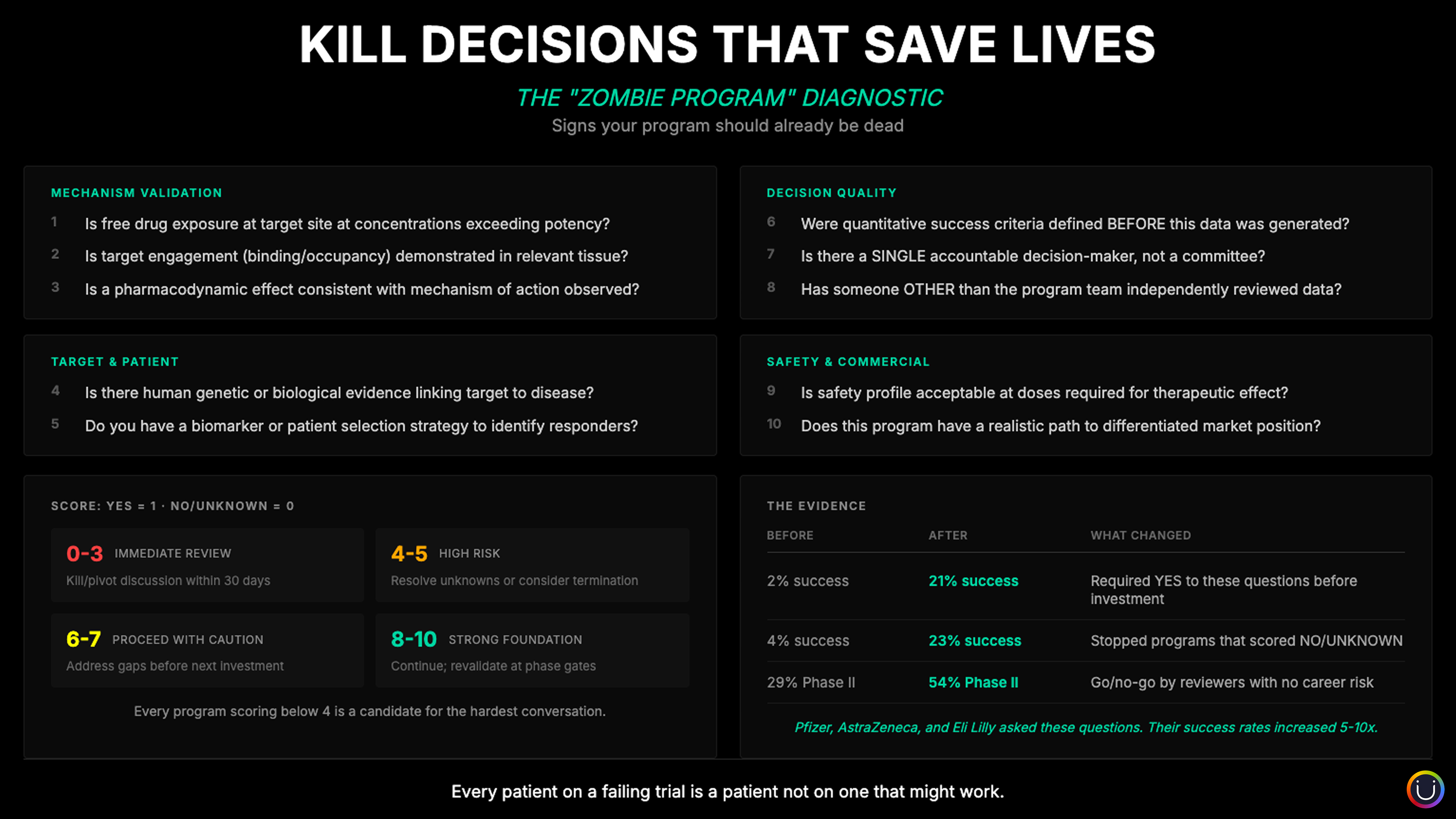

What did Pfizer, AstraZeneca, and Lilly actually change? Beyond the frameworks and the cultural transformation, they institutionalized a set of questions that most organizations avoid asking, or ask too late.

When Pfizer analyzed 44 Phase II programs, they found that 43% couldn't even determine whether the mechanism had been adequately tested. The problem wasn't failed science. It was unanswered questions. They identified three fundamental requirements, which they called the "Three Pillars": Has the drug reached the target site at sufficient concentration? Has it bound to the intended target? Has it produced the expected pharmacological effect? When none of these could be demonstrated, 100% of programs failed. When all three were evident, 86% successfully tested their mechanism.

AstraZeneca's 5R framework asked different but complementary questions: Is there human evidence linking this target to disease? Can we identify patients who will respond? Is the safety profile acceptable at therapeutic doses? Does a viable commercial path exist? Programs that couldn't answer these questions with confidence were stopped before significant investment accumulated.

Lilly's Chorus model added a structural question: Has someone independent of the program team reviewed this data? By separating advocates from evaluators, Chorus resolved programs in months that would have lingered for years under traditional governance.

These questions share a common characteristic: each is framed so that it can be answered yes or no. In practice there is a third possible answer, unknown, and "unknown" is treated the same as "no" when making investment decisions. This is the discipline that separated the companies that transformed from those that didn't.

The questions fall into four categories:

Mechanism validation. Is the drug reaching, binding, and modulating the target? Without this evidence, you're not testing your hypothesis. You're hoping.

Target and patient. Is there human genetic or biological evidence for this target? Can you identify which patients will respond? Without patient selection, you're running an expensive lottery.

Decision quality. Were success criteria defined before the data existed? Is there a single accountable decision-maker? Has someone outside the program team reviewed the evidence? Without these safeguards, confirmation bias operates unchecked.

Safety and commercial viability. Is the safety profile acceptable at efficacious doses? Does differentiated market position exist? Without these, even scientific success leads nowhere.

The uncomfortable truth is that most portfolio reviews don't systematically ask these questions. And when they're asked, "unknown" is often treated as permission to continue rather than a signal to stop.
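One way to picture the rule "unknown counts as no" is as a gate that lets a program continue only when every question has a demonstrated yes. The Python sketch below is a minimal illustration under that assumption. The names `Answer` and `gate_decision`, the question labels, and the example review are all hypothetical, and none of the three companies is known to implement its framework this way.

```python
from enum import Enum


class Answer(Enum):
    YES = "yes"
    NO = "no"
    UNKNOWN = "unknown"


def gate_decision(answers: dict[str, Answer]) -> tuple[str, list[str]]:
    """Return ("continue" or "stop", list of questions not answered YES).

    The burden of proof is on continuation: UNKNOWN is handled exactly
    like NO, and a review with no answers at all also stops.
    """
    if not answers:
        return "stop", []
    unmet = [question for question, answer in answers.items()
             if answer is not Answer.YES]
    return ("stop" if unmet else "continue"), unmet


# Hypothetical review covering the four categories described above.
review = {
    "Mechanism validation": Answer.YES,
    "Target and patient": Answer.UNKNOWN,
    "Decision quality": Answer.YES,
    "Safety and commercial viability": Answer.YES,
}

decision, unmet = gate_decision(review)
print(decision, unmet)
```

In this example the single "unknown" is enough to return a stop decision, and the list of unmet questions tells the team exactly what evidence would be needed to reopen the case.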

The companies that transformed their productivity didn't discover new science. They discovered new discipline: the discipline to ask hard questions early, to treat uncertainty as risk rather than opportunity, and to stop programs that couldn't answer the questions that predict success.

Why Most Haven't Followed

The frameworks from Pfizer, Lilly, and AstraZeneca weren't developed in secret. They were published in leading journals, presented at conferences, discussed in industry forums. The playbook for disciplined portfolio decisions has been available for over a decade. Yet a 2024 analysis of 200 pharmaceutical firms found that industry-wide R&D productivity remains essentially flat.

If the playbook works and the playbook is public, what explains the gap?

The uncomfortable answer is that implementing these frameworks requires something most organizations find extraordinarily difficult: changing the relationship between decisions, accountability, and career consequences.

Consider what Pfizer, Lilly, and AstraZeneca actually did. They didn't just add a new checklist or governance committee. They restructured how decisions get made. They created mechanisms that separate program advocates from program evaluators. They built cultures where stopping a program is celebrated, not mourned. They protected the people who deliver uncomfortable truths.

Each of these changes threatens something. Separating advocates from evaluators reduces the influence of program teams. Celebrating termination challenges deeply held beliefs about what success looks like. Protecting truth-tellers means accepting that leadership might hear things they'd rather not hear.

McKinsey's research on organizational transformation puts numbers to this challenge. Seventy percent of transformation initiatives fail. When asked why, 72% of respondents point to employee resistance and lack of management support. The barrier isn't designing the new process. It's getting people to actually use it when the new process produces uncomfortable outcomes.

In pharmaceutical R&D specifically, McKinsey found that 83% of organizations lack sufficient change management skills, and only 7% of employees feel that senior executives use effective change processes, compared to 15% across other industries. The industry that prides itself on scientific rigor struggles more than most with the human dynamics of organizational change.

A 2022 McKinsey analysis of biopharma R&D transformation was even more direct. They identified "deep-rooted 'old guard' narratives" as a primary barrier to adopting new ways of working. The resistance isn't irrational. People who built careers under the old system have legitimate concerns about their place in the new one.

This is the courage gap. Not a gap in knowledge, but a gap in willingness to implement what the knowledge demands.

The playbook requires leaders to build systems that might surface problems they'd rather not see, protect people who might challenge their decisions, and redefine success in ways that some colleagues will resist.

It's easy to understand why most companies haven't done this. It's hard. It's uncomfortable. It creates organizational friction in the short term, even if it creates value in the long term. Leaders who attempt it risk resistance from their own teams, skepticism from their boards, and the possibility that they might be wrong.

But understanding why it's hard doesn't make the cost of inaction any less real.

The Cost That Doesn't Appear on Dashboards

Every program that continues past the point where it should have stopped consumes resources that could go elsewhere. Budget. Headcount. Laboratory capacity. Leadership attention. These are finite. When they flow to a program that will never reach patients, they don't flow to one that might.

This is the cost that rarely appears on portfolio dashboards. We track the investment in programs that fail. We calculate the sunk costs. But we rarely quantify the opportunity cost: the programs that moved slower, received less attention, or never started at all because resources were tied up in programs that should have been stopped months or years earlier.

For the scientists working on those under-resourced programs, the cost is professional. Their work advances more slowly. Their programs compete for attention against programs with more momentum, regardless of merit.

For the company, the cost is competitive. While resources churn through programs that will never succeed, competitors with more disciplined portfolios move faster. First-mover advantages in therapeutic areas go to organizations that concentrate resources on their best opportunities. As we explored in The $25B AI Paradox, risk asymmetry shapes organizational behavior in ways that perpetuate the status quo, even when leaders know better approaches exist.

But the cost that matters most doesn't show up in quarterly reports or portfolio reviews.

Somewhere, a patient is waiting for a medicine that doesn't exist yet. Maybe it's a child with a rare disease whose parents have exhausted every available option. Maybe it's an adult with a cancer that doesn't respond to current treatments. Maybe it's someone whose condition isn't life-threatening but erodes their quality of life every day.

These patients don't experience "late-stage attrition" or "suboptimal resource allocation." They experience delay. They experience hope deferred. In some cases, they experience a medicine that arrived too late, or never arrived at all.

Every resource tied to a program that will never reach patients is a resource stolen from one that might.

This isn't meant to assign blame. The people working in pharmaceutical R&D are trying hard. Scientists pour years of their lives into programs because they believe those programs might help patients. Portfolio managers wrestle with impossible tradeoffs and incomplete information. Executives balance fiduciary duties against long-term mission. Everyone in the system is doing their best within the constraints they face.

But the constraints can be changed. The companies that implemented disciplined kill frameworks didn't do it because their people were smarter or more virtuous. They did it because their leaders made structural changes that aligned individual incentives with collective outcomes. They made it safe to stop programs that weren't working. They made it honorable to free resources for programs that might.

The question for every portfolio leader isn't whether disciplined kill decisions are possible. The evidence says they are. Pfizer, Lilly, and AstraZeneca demonstrated what's achievable when organizations commit to the structural and cultural changes required.

The question is whether your organization is willing to build the systems that make those decisions possible. Whether leaders will create environments where raising concerns is rewarded rather than punished. Whether governance structures will assign clear accountability rather than diffusing it across committees. Whether the culture will celebrate disciplined termination as an act of strategic clarity rather than mourning it as failure.

The playbook is published. The evidence is clear. What remains is finding the organizational courage to implement it, so that the medicines that can reach patients actually do.

The kill decision isn't just a portfolio management problem. It's a test of whether organizations can align their structures with their stated mission. The companies that pass that test will deliver more medicines to more patients. The ones that don't will continue to wonder why their productivity remains stubbornly flat, despite everyone trying so hard.

The hardest decision in portfolio management isn't which program to fund. It's finding the courage to stop the ones that won't get there, so the ones that might actually can.

References

- McKinsey & Company (January 2025). "How biopharmaceutical leaders optimize their portfolio strategies."

- Hutter, C. et al. (September 2024). "The pharmaceutical productivity gap: R&D returns, therapeutic focus and portfolio strategy." Drug Discovery Today.

- Bieske, L. et al. (2023). "Cognitive biases in pharmaceutical R&D portfolio management decisions." Drug Discovery Today.

- Fernando, K. et al. (2022). "Achieving end-to-end success in the clinic: Pfizer's learnings on R&D productivity." Drug Discovery Today.

- Morgan, P. et al. (2018). "Impact of a five-dimensional framework on R&D productivity at AstraZeneca." Nature Reviews Drug Discovery.

- Owens, P.K. et al. (2015). "A decade of innovation in pharmaceutical R&D: the Chorus model." Nature Reviews Drug Discovery.

- Peck, R.W. et al. (2015). "Why is it hard to terminate failing projects in pharmaceutical R&D?" Nature Reviews Drug Discovery.

- Morgan, P. et al. (2012). "Can the flow of medicines be improved?" Drug Discovery Today.

- McKinsey & Company (2022). "Transforming biopharma R&D at scale."

- McKinsey & Company. "Organizations do not change. People change!"

- McKinsey & Company. "Confronting change fatigue in the pharmaceutical industry."

Further Reading

- Headcount Is Not Capability: Why Pharma Keeps Hiring and Firing Its Way to the Same Problem. On why adding resources doesn't solve structural problems.

- The Coordination Fallacy: Two Realities in Drug Development. On the gap between what leadership believes is happening and what program teams actually experience.

- The $25B AI Paradox: Why Pharma is Missing Its Biggest Opportunity. On risk asymmetry: why organizations avoid operational transformation despite knowing it's needed.

- Four Companies Wearing One Logo: The Architecture of Disconnection. On functional fragmentation and why coordination is the missing layer.

- Multibillion Dollar Portfolio Decisions: When Guesstimates Aren’t Enough. On portfolio decision frameworks and the scale of investment at stake.

About Me

I'm Andy, pharmacist, MIT engineer, 25 years in life sciences operations. I started Unipr because I watched too many medicines get delayed by coordination failures, not science failures. We support 100+ pipeline programs at top pharma and biotech across 30+ countries.

This article is why we built Optimizer: an AI agent that helps portfolio leaders evaluate tradeoffs, compare scenarios, and make resource decisions with clarity. Because the hardest decisions shouldn't also be the loneliest. Learn more at unipr.com/agents/optimizer.
