Headcount Is Not Capability: Why Pharma Keeps Hiring and Firing Its Way to the Same Problem

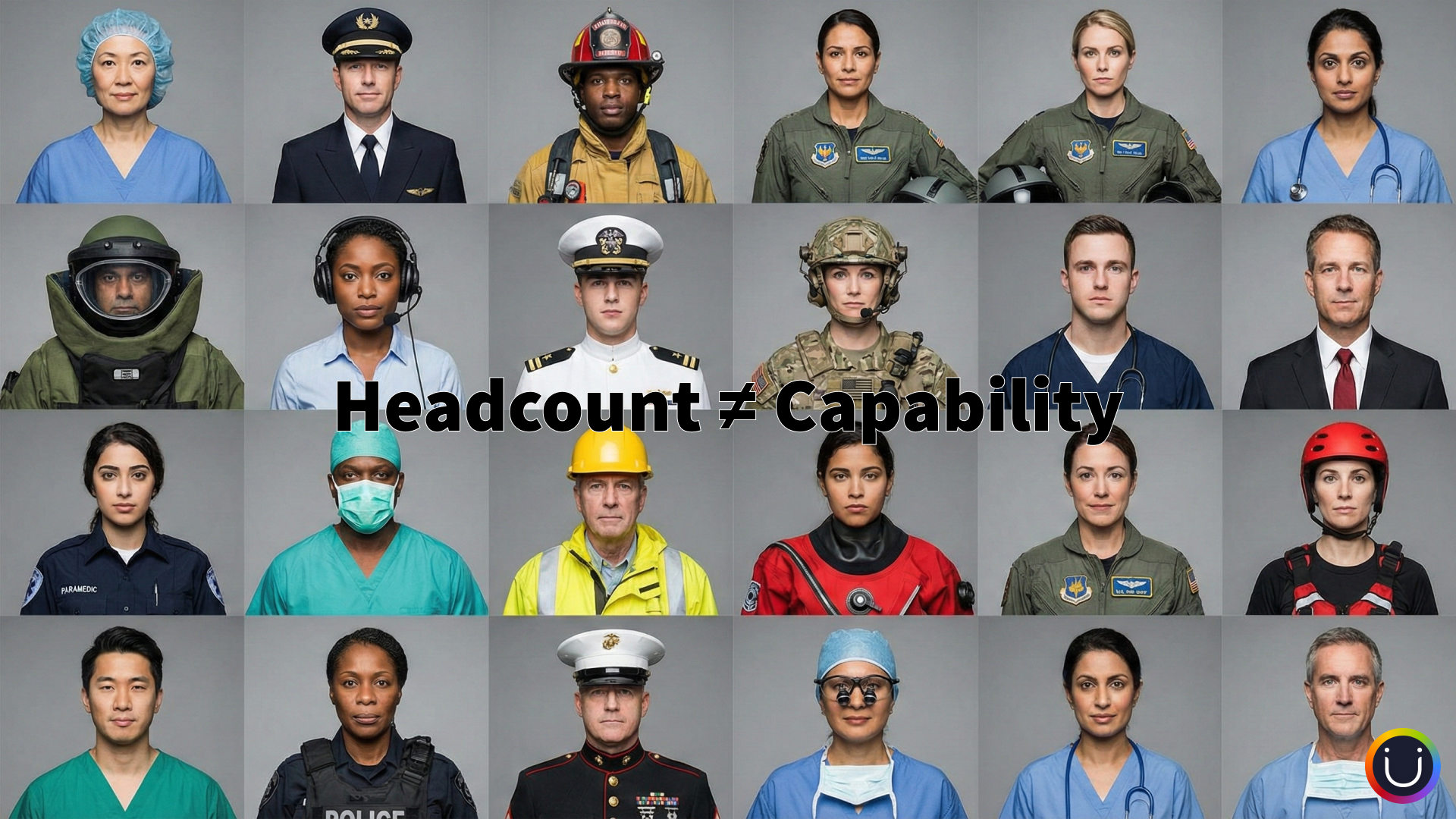

When organizations plan by headcount rather than capability, they create accountability gaps that governance cannot solve.

Thirty-nine thousand jobs. That's how many positions just six Big Pharma companies (Novo Nordisk, Johnson & Johnson, Roche, Novartis, Pfizer, and Bayer) could eliminate under restructuring targets announced through late 2025. Across the broader industry, BioSpace's tracking shows 42,700 employees affected last year alone, a 47% increase over 2024.

The conventional explanation frames this as product lifecycle management: patent cliffs approach, pipelines shift, organizations rightsize. Reasonable enough on the surface.

But look closer and paradoxes emerge.

Novo Nordisk nearly doubled its workforce, from 43,000 to 78,400 employees between 2019 and 2024, riding the GLP-1 wave, only to announce 11% cuts in 2025 while still facing capacity constraints that limit its ability to meet demand. Merck announced roughly 6,000 job cuts even as it prepares to launch more than 20 products from its near-term pipeline. Across the industry, 36% of biopharma leaders cite talent shortage as their primary workforce challenge, according to AMS research, even as their companies execute reductions.

These aren't simply the mechanics of portfolio adjustment. They reveal something deeper: a philosophical gap in how life sciences organizations think about human resources.

When you hire by headcount and cut by headcount, you optimize for a variable (the number of people) without examining the more consequential variable: the match between specific expertise and specific challenges. You can add 35,000 employees in five years and still lack the particular capabilities your pipeline requires. You can cut 6,000 employees and inadvertently eliminate the exact expertise your launches demand.

The result is a workforce that expands and contracts like an accordion, with organizations perpetually surprised that productivity doesn't scale with headcount. McKinsey's analysis of R&D productivity shows it has been essentially flat since 2012, despite massive investments in talent, technology, and process improvement.

The volatility isn't primarily about product cycles. It's about a resource allocation philosophy that treats humans as interchangeable units, and the downstream consequences that philosophy creates.

The Unexamined Hiring Hypothesis: Are All Humans in Similar Roles Equally Skilled at Them?

How do companies decide who to hire for what?

The answer reveals a spectrum of maturity that most organizations haven't examined explicitly.

Level 1: Top-Down Allocation. This is where the majority of the industry operates. Planning teams use historical patterns to forecast future needs. If a Phase III oncology program required 12 regulatory FTEs last time, the model assumes 12 regulatory FTEs this time. The approach is fast, consistent, and algorithm-friendly. It also embeds a fatal assumption: that the future will resemble the past, and that all regulatory FTEs are equivalent.

At Level 1, no one examines the actual work to be done. No one owns the estimation. When resources prove inadequate, accountability dissolves into "we followed the model."
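To make the Level 1 logic concrete, here is a minimal Python sketch of top-down allocation. The lookup table, its keys, and the CMC figure are hypothetical; only the 12 regulatory FTEs come from the example above. The point is what the function cannot see: the work itself, and who specifically fills those slots.

```python
# Hypothetical history: FTEs used per (program type, function) last time.
HISTORICAL_FTES = {
    ("phase_3_oncology", "regulatory"): 12,  # the example from the text
    ("phase_3_oncology", "cmc"): 8,          # hypothetical
}


def forecast_ftes(program_type: str, function: str) -> int:
    """Level 1 sketch: assume the future repeats the past.

    The result is a count of interchangeable FTEs. Nothing here inspects
    the deliverables or asks who, specifically, should do the work.
    """
    return HISTORICAL_FTES[(program_type, function)]


print(forecast_ftes("phase_3_oncology", "regulatory"))  # 12
```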

Level 2: Bottom-Up Calculation. More sophisticated organizations start from the work itself. They build a work breakdown structure, scope tasks to milestones, and calculate role requirements based on actual deliverables. This is more rigorous, requires genuine planning discipline, and produces fewer surprises. It's the standard most companies aspire to.
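A Level 2 calculation can be sketched the same way, again in Python, with made-up tasks, made-up effort estimates, and an assumed 800 productive hours per person in the planning window. It is more rigorous because it starts from deliverables, yet its output is still a count per role.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    role: str
    effort_hours: float  # hypothetical estimate


# Hypothetical work breakdown for one milestone window.
WBS = [
    Task("Clinical study report", "regulatory_writer", 900),
    Task("Module 2 summaries", "regulatory_writer", 700),
    Task("Process validation plan", "cmc_lead", 600),
]

HOURS_PER_FTE = 800  # assumed productive hours per person in the window


def roles_required(tasks, hours_per_fte=HOURS_PER_FTE):
    """Level 2 sketch: sum effort per role, then convert to headcount."""
    hours = defaultdict(float)
    for t in tasks:
        hours[t.role] += t.effort_hours
    # Round up: a partial workload still needs someone assigned to it.
    return {role: math.ceil(h / hours_per_fte) for role, h in hours.items()}


print(roles_required(WBS))  # {'regulatory_writer': 2, 'cmc_lead': 1}
```

The output names roles, not people: two regulatory writers, any two.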

And yet Level 1 and Level 2 share a fundamental flaw that rarely gets named: both treat everyone who holds the same role as interchangeable.

Level 3: Expertise-Based Planning. This is where the philosophy changes. Instead of asking how many people we need, Level 3 asks which specific person is best suited to this specific challenge.

The shift sounds subtle. It's not.

When you match expertise to challenge, you're not just filling a role. You're assigning ownership. The person who has navigated this exact complexity before doesn't need supervision. They don't need a committee validating their judgment. They can own the outcome, because they've earned the right to own it through demonstrated capability.

This is the realistic, scientific approach: each piece of work gets assigned to the individual best suited to do justice to it. Not the available person. Not the person whose utilization rate needs balancing. The person whose specific experience matches the specific challenge.

A "Regulatory Writer" is no longer just any "Regulatory Writer." A "CMC Lead" is no longer whoever happens to be open. Planning systems stop slotting people into roles as if they were standardized components. Each work assignment becomes a deliberate match, backed by data about experience and expertise, not by gut feel or familiarity.
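The contrast between filling a role and matching expertise can be sketched as two selection functions. This is a toy illustration, not a prescribed algorithm: the `Person` fields, the challenge tags, the names, and the scoring rule (how many times each required challenge was navigated before) are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Person:
    name: str
    title: str
    # Hypothetical experience record: challenge tag -> times navigated.
    experience: dict[str, int] = field(default_factory=dict)
    available: bool = True


def pick_by_availability(people, title):
    """Role-filling habit: the first available person with the right title."""
    return next((p for p in people if p.title == title and p.available), None)


def pick_by_expertise(people, challenges):
    """Expertise-matching sketch: whoever has navigated these challenges most.

    Titles are ignored on purpose, so relevant experience in another
    function still counts. Returns None when no available person has any
    relevant experience, which is a hiring signal rather than an assignment.
    """
    def score(p):
        return sum(p.experience.get(c, 0) for c in challenges)

    available = [p for p in people if p.available]
    best = max(available, key=score, default=None)
    return best if best is not None and score(best) > 0 else None


team = [
    Person("Writer A", "Regulatory Writer", {"lifecycle_filing": 4}),
    Person("Writer B", "Regulatory Writer",
           {"first_in_class_submission": 2, "complete_response_letter": 1}),
]
needed = ["first_in_class_submission", "complete_response_letter"]
print(pick_by_availability(team, "Regulatory Writer").name)  # Writer A
print(pick_by_expertise(team, needed).name)                   # Writer B
```

Returning `None` instead of the least-bad candidate is a deliberate choice in this sketch: it surfaces the case where the organization lacks the expertise entirely.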

Consider the pilot analogy. Autopilot handles cruising altitude. Co-pilots manage routine flight segments. But captains do takeoffs and landings. Why? Because not all phases of flight carry the same consequence, and not all pilots have equivalent expertise for high-stakes moments. Airlines don't say "we have adequate pilots" and randomly assign them to flights. They match specific expertise to specific challenges.

The difference between suboptimal execution and superior outcomes often comes down to this single variable: did the work have a true owner, or merely an assignee?

But most organizations don't operate at Level 3. When a resource plan assigns roles without assessing whether the specific person has handled this specific complexity before, capability and challenge become mismatched.

The result is predictable: when you can't trust that the person assigned has the expertise to handle the specific challenge, you compensate with oversight.

The Architecture of Distrust: How Mismatched Assignments Create Accountability Gaps

When you treat humans as interchangeable units, you can't fully trust that the person assigned will handle the specific challenge. Not because they're incompetent, but because you have no basis for confidence. You haven't matched capability to consequence. You've matched availability to task.

So what do you do? You build oversight. You create committees. You add review layers. You require consensus before decisions move forward.

Consider the contrast: a CEO doesn't need a 12-person steering committee for every decision. Why? Because the selection process ensured that the person in the role has the expertise, judgment, and accountability to decide. We trust the match between the individual and the weight of the office.

The same principle applies at every level of work. Each task essentially needs its own CEO: a work owner who deserves the assignment, takes pride in it, and executes it to the best of their ability. When every piece of work gets assigned to the person best suited for it, operational excellence flows naturally. You get superior outcomes without heroic effort. The oversight becomes unnecessary because the match was right from the start.

But when a resource plan assigns roles without assessing whether the specific person has handled this specific complexity before, doubt is already seeded. Not overt doubt, not conscious doubt, but structural doubt. The system doesn't know whether to trust the output. So it adds reviewers. It adds approval layers. It creates governance structures that are really just institutionalized distrust.

The pattern plays out like this:

Treat humans as interchangeable units. This makes it impossible to trust that assigned people will handle specific challenges. So you add oversight, committees, and review layers. Decision authority diffuses across many people. Consensus becomes required. Decisions slow, stall, or default to the safest option. Bold moves that could accelerate programs don't happen. And patients wait.

This explains why the industry has invested decades in resource visibility, forecasting algorithms, and planning tools, yet R&D productivity has been essentially flat since 2012. The tools aren't the problem. The philosophy embedded in the tools is the problem.

Visibility without trust creates surveillance, not true coordination.

When you don't trust that the right person is matched to the right challenge, you compensate with monitoring. But monitoring isn't coordination. Dashboards that track activity don't move programs forward. Status reports that flag variances don't resolve the underlying capability gaps.

You can see everything and still be unable to act decisively, because the people who could act don't have the expertise to bear the risk, and the people who have the expertise don't have the authority to decide.

McKinsey's January 2025 analysis of biopharma operating models names this dynamic directly: committees with overlapping decision rights, unclear accountability, and decision-making that has become "slow and bureaucratic." Their research finds that only 20% of biopharma leaders believe their operating model enables timely cross-functional decision-making.

The conventional response is to mandate clearer accountability. Define RACI matrices. Clarify decision rights. Streamline governance.

But these interventions treat the symptom, not the cause. You cannot mandate trust into existence, and you cannot engineer confidence in assigned resources through governance. If the underlying matching problem remains, if traditional planning continues to treat humans as fungible, the distrust that spawns committee bloat will simply reassert itself in new forms.

The accountability gap isn't a governance problem you can restructure away. It's a downstream consequence of how you match people to work in the first place.

The Manufacturing Mindset: We Are Still Living in the Shadows of Our Past

The philosophical roots trace back to manufacturing. Frederick Taylor's scientific management, developed in American factories around the turn of the twentieth century, optimized for standardization and repeatability. Workers were interchangeable by design. The system's intelligence lived in the process, not the person. You could swap one trained operator for another without consequence because the task was engineered to eliminate variation.

This mindset migrated into workforce planning. Roles became units. Headcount became the measure. Planning systems evolved to forecast demand, allocate capacity, and balance utilization, all treating "a Regulatory Writer" or "a CMC Lead" as standardized inputs to a production function.

For routine work, this works well enough. When the task is repeatable, when the deliverable is standardized, when the risk of failure is bounded, interchangeability is efficient. You don't need a captain for cruising altitude.

But drug development is not a factory. Every program carries unique scientific risk. Every regulatory pathway has specific complexity. Every manufacturing scale-up encounters novel challenges. The work that determines whether a medicine reaches patients is precisely the work where expertise matching matters most. And it is where the manufacturing mindset fails most consequentially.

The uncomfortable truth is that this failure implicates everyone.

It implicates the planning systems that reduce humans to FTEs and roles. It implicates the leaders who accept headcount forecasts without asking whether the right expertise exists within that headcount. It implicates the HR systems that track skills as checkboxes rather than as nuanced capability profiles. It implicates the project managers who staff from availability rather than from challenge-to-capability fit. And it implicates the culture that has normalized "we had adequate resources" as a defense when programs fail, without ever examining whether the resources were adequate for the specific challenge at hand.

No one is solely at fault. The system emerged from reasonable decisions, each sensible in isolation. Top-down planning is fast and consistent. Bottom-up planning is rigorous and defensible. Both create the appearance of discipline. Both generate artifacts that satisfy governance requirements.

But both embed the same fatal assumption: that humans in the same role are interchangeable for planning purposes. And that assumption, invisible in the planning methodology, surfaces visibly in the operating model as committees, review layers, and the diffusion of accountability that everyone complains about but no one traces back to its origin.

The hiring and firing cycles are not the disease. They're the fever. The disease is a resource allocation philosophy that cannot distinguish between having headcount and having capability.

The Compounding Cost: Consequences for Patients and Business

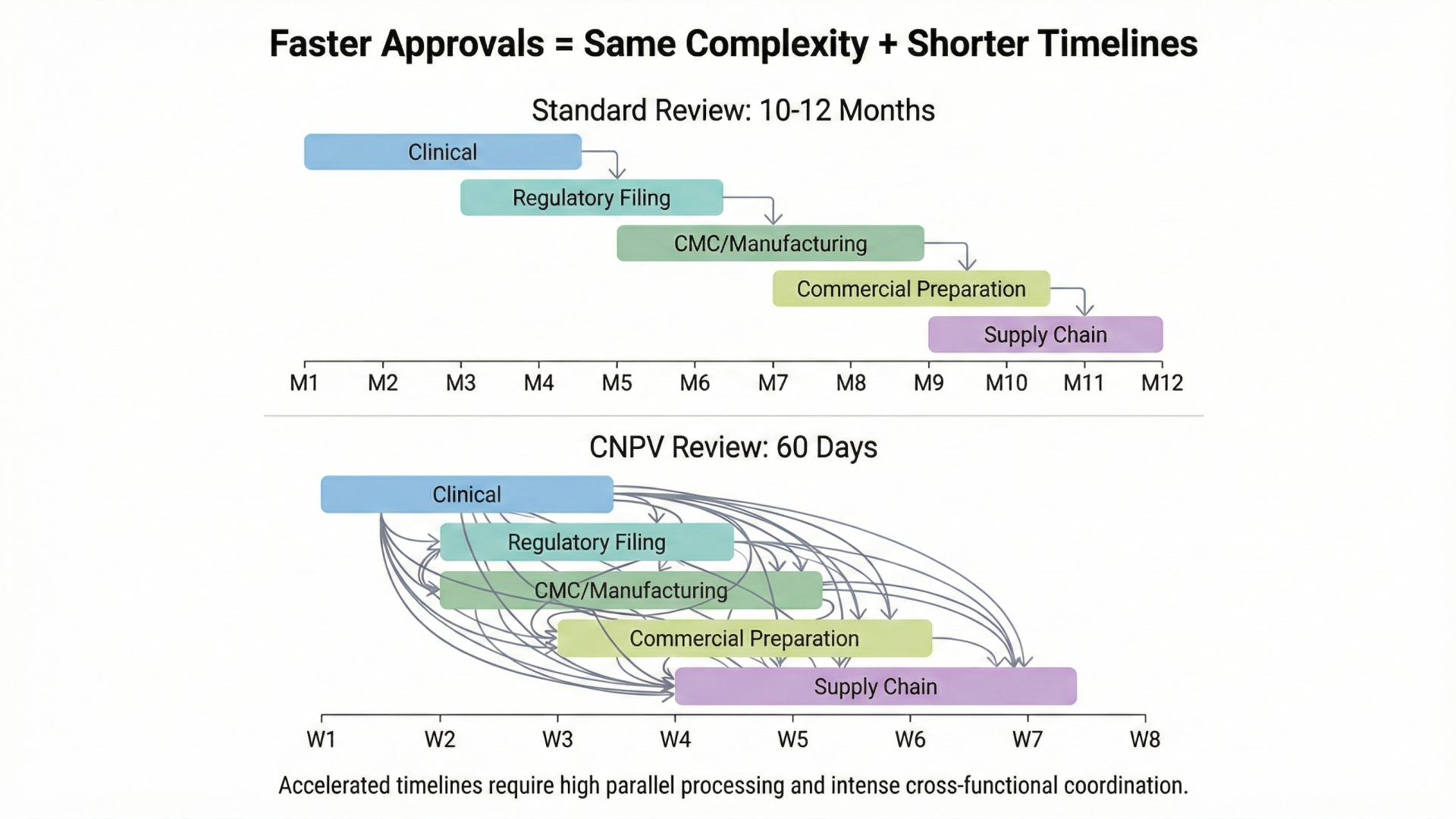

When committees multiply because trust in assigned resources is absent, decisions slow. When decisions slow, timelines extend. When timelines extend, patients wait.

The math is unforgiving. Tufts CSDD data shows that a single day of delay costs approximately $500,000 to $800,000 in unrealized prescription drug sales for marketed therapies. Phase III trials burn roughly $55,000 per day in direct costs. But these figures, as staggering as they are, capture only the financial dimension.
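Applied to a hypothetical 30-day delay, the per-day figures above work out as follows. The sketch simply multiplies the cited daily figures; it keeps unrealized sales and direct trial cost separate because they measure different things, and the 30-day duration is an assumption chosen for illustration.

```python
# Per-day figures as cited in this article (Tufts CSDD); USD.
UNREALIZED_SALES_PER_DAY = (500_000, 800_000)  # low, high
PHASE_3_DIRECT_COST_PER_DAY = 55_000


def delay_cost(days: int) -> dict[str, int]:
    """Multiply the cited daily figures by a delay length in days."""
    low, high = UNREALIZED_SALES_PER_DAY
    return {
        "unrealized_sales_low": days * low,
        "unrealized_sales_high": days * high,
        "phase_3_direct_cost": days * PHASE_3_DIRECT_COST_PER_DAY,
    }


print(delay_cost(30))
# {'unrealized_sales_low': 15000000, 'unrealized_sales_high': 24000000,
#  'phase_3_direct_cost': 1650000}
```

In other words, one month of delay corresponds to roughly $15 million to $24 million in unrealized sales, plus about $1.65 million in direct costs if the delay falls during Phase III.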

For a patient with metastatic cancer, a delay measured in months isn't an abstraction. Treatment delays as short as four weeks are associated with higher cancer mortality. Every committee meeting that ends without a decision, every review cycle that adds weeks to a timeline, every governance layer that exists because the system doesn't trust its own resource assignments: each translates into patients who don't receive therapies that could extend or improve their lives.

The business consequences cascade as well. When decision-making is slow and bureaucratic, organizations lose competitive positioning. First-mover advantages evaporate. Launch windows narrow or close entirely. The 36% of biopharma leaders citing talent shortage as their primary challenge aren't wrong about the symptom, but they're often treating it with the same methodology that created it: hiring more headcount without solving the matching problem, then cutting headcount when pipelines shift, destroying institutional knowledge in the process.

This is the "firing for hiring" trap. Organizations eliminate experienced people during downturns, then discover they need to rebuild expertise during upturns. But expertise isn't fungible. The regulatory writer who navigated a complex FDA interaction last year carries knowledge that doesn't transfer automatically to a new hire, regardless of how similar their role titles appear.

McKinsey's analysis of biopharma operating models estimates that addressing these structural inefficiencies could improve speed to market by 15-30%. That's not optimization at the margins. That's months or years of patient access, billions in revenue, recovered through a philosophical shift in how organizations match people to work.
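To see why a 15-30% improvement translates into months or years, apply it to a development timeline. The 96-month (eight-year) baseline below is a hypothetical round number, not a figure from the McKinsey analysis.

```python
def months_saved(baseline_months: int, improvement_pct: int) -> float:
    """Months recovered if a timeline shrinks by improvement_pct percent."""
    # Percent is passed as an integer; multiply before dividing.
    return baseline_months * improvement_pct / 100


for pct in (15, 30):
    print(f"{pct}% faster on a 96-month timeline: {months_saved(96, pct)} months")
# 15% faster on a 96-month timeline: 14.4 months
# 30% faster on a 96-month timeline: 28.8 months
```

On that assumed baseline, the range corresponds to roughly 1.2 to 2.4 years per program.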

The Philosophical Shift: From Fungibility to Human Ingenuity

Expertise-based planning means abandoning the assumption that humans in the same role are interchangeable for planning purposes. It means building systems and practices that match specific expertise to specific challenges: not just roles to tasks or headcount to forecasts.

This is not about creating elaborate skill taxonomies or installing another HR technology platform. It's about changing the question that resource planning answers.

Level 1 asks: Based on historical patterns, how many FTEs do we need?

Level 2 asks: Based on the work breakdown, what roles do we need and when?

Level 3 asks: Given the specific challenges this program will face, which specific people have the expertise to navigate them? And do we have access to those people, at that time?

The difference is profound. Expertise-based planning treats resource allocation as a capability-matching problem, not a capacity-filling problem. It recognizes that a first-in-class regulatory submission requires different expertise than a lifecycle management filing, even if both are executed by people with "Regulatory Writer" in their title. It acknowledges that scaling a novel modality requires different experience than scaling a well-understood small molecule process, even if both fall under "CMC Lead."

When organizations make this shift, something else changes: the need for oversight diminishes.

If you trust that the person assigned to a high-stakes deliverable has navigated that specific complexity before, you don't need a committee to review their judgment. You can delegate with confidence. Decision authority can rest with the people closest to the work, because the matching process already ensured they have the expertise to bear the responsibility.

This is why accountability is not primarily a governance problem. It's a resource allocation problem. Fix the matching, and accountability emerges naturally.

The committees, the review layers, the diffused decision rights: these are compensating mechanisms for a matching problem that governance cannot solve.

This shift takes on new urgency in the age of AI.

The predicted wave of AI-driven job elimination hasn't materialized, and history suggests it won't. Electricity didn't eliminate jobs. The internet didn't eliminate jobs. Technologies transform roles, create new categories of work, automate certain tasks. But the net effect has consistently been evolution, not elimination.

The 42,700 jobs lost across biopharma in the past year were not eliminated by AI. AI may serve as a convenient excuse, but it was not the cause. These cuts followed the same pipeline setbacks, patent cliffs, and resource planning logic that has driven hiring and firing cycles for decades.

But here is where AI becomes relevant to how we think about resource allocation:

If a job is defined by fungibility, by interchangeable tasks that any person in the role could perform, there is a reasonable chance it can be automated. But if a job is defined by capability, experience, expertise, and human ingenuity, no AI can truly replace it.

This is the crux. Organizations that treat humans as interchangeable units are, perhaps unknowingly, making the case for their own automation. If your planning system assumes any Regulatory Writer is equivalent to any other, you're defining the role in precisely the terms that make it AI-susceptible.

Some executives at this year's JPM conference predicted they could run their companies with less than half their historical headcount. If organizations continue planning with traditional approaches, treating expertise as fungible, that prediction may prove accurate. The fungible jobs will be automated. The oversight layers that exist because nobody trusts the assignments will be replaced by systems that don't require trust.

Organizations that shift to expertise-based planning, that define roles by human uniqueness rather than interchangeability, will discover their people become irreplaceable precisely because they're treated as irreplaceable.

The intervention isn't restructuring the org chart. It's rethinking how you answer the question: who should do this work?

From Principle to Practice: What Expertise-Based Planning Actually Looks Like

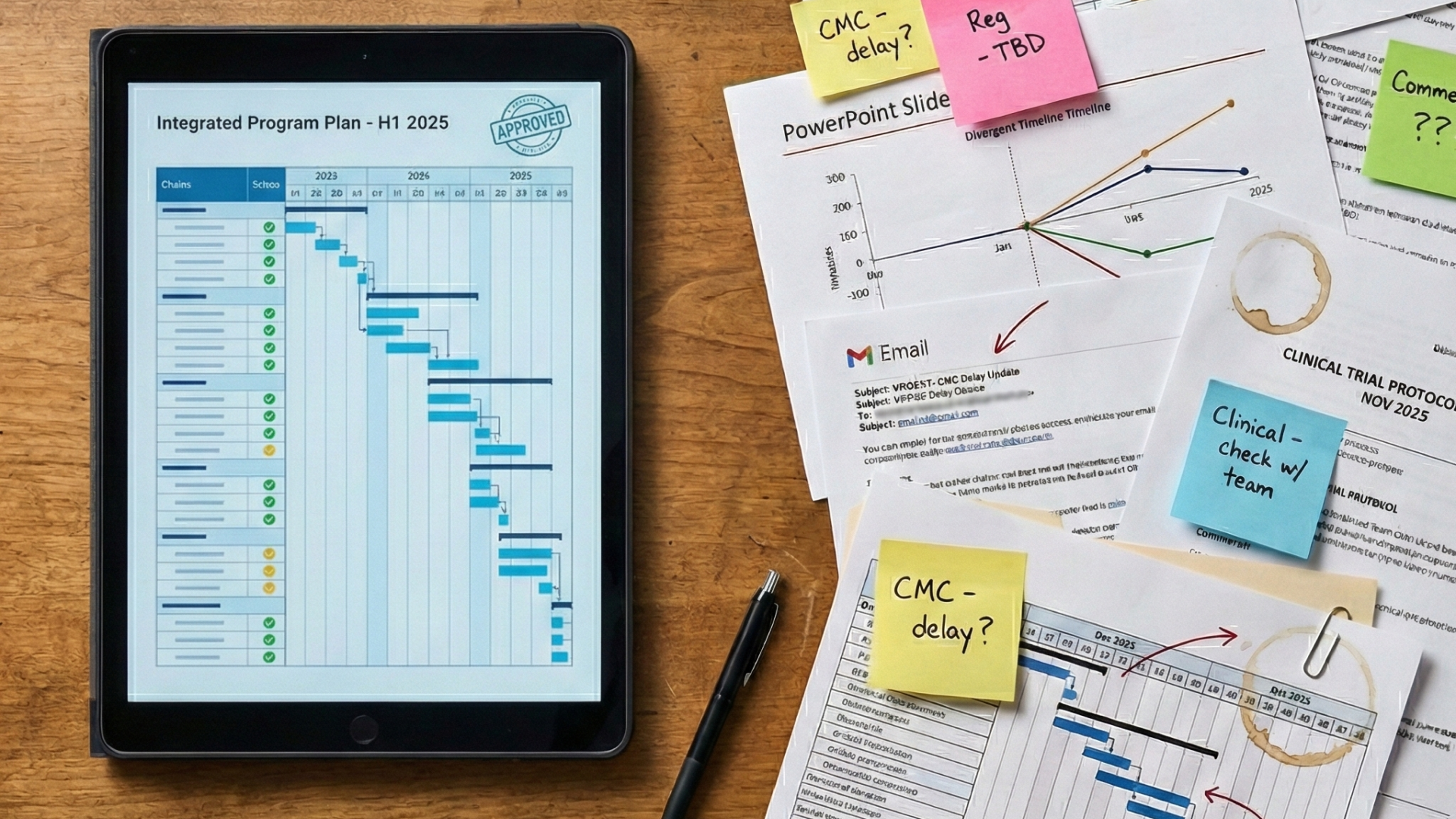

It begins with visibility into expertise, not just roles. Organizations already track who holds which title, who is allocated to which project, and who is available in which timeframe. Expertise-based planning requires a different kind of visibility: who has actually navigated which specific challenges, with what outcomes, under what circumstances.

This isn't about building a database of skills checkboxes. It's about understanding the texture of experience. A CMC lead who has scaled three monoclonal antibody processes has different expertise than one who has scaled one mRNA platform, even if both have identical titles and tenure. A regulatory writer who has navigated two complete response letters has different pattern recognition than one who has only worked on successful first-cycle approvals.

The information exists. It lives in project histories, in institutional memory, in the tacit knowledge that experienced leaders carry. The challenge is making it accessible at the moment of assignment, so that resource decisions can be made on the basis of expertise match rather than availability alone.
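One way to picture the "texture of experience" as data: instead of a skills checkbox, keep one record per challenge a person actually navigated, with the program and the outcome. The Python sketch below builds such an index from project-history records; the record fields, challenge tags, program names, and people are hypothetical.

```python
from collections import defaultdict
from typing import NamedTuple


class ProjectRecord(NamedTuple):
    person: str
    program: str
    challenge: str
    outcome: str


# Hypothetical records mined from project histories.
HISTORY = [
    ProjectRecord("Lead X", "mAb-1", "mab_scale_up", "on_time"),
    ProjectRecord("Lead X", "mAb-2", "mab_scale_up", "delayed"),
    ProjectRecord("Lead X", "mAb-3", "mab_scale_up", "on_time"),
    ProjectRecord("Lead Y", "mRNA-1", "mrna_scale_up", "on_time"),
    ProjectRecord("Writer Z", "Program-7", "complete_response_letter",
                  "approved_on_resubmission"),
]


def build_expertise_index(records):
    """challenge -> person -> [(program, outcome), ...], not a yes/no flag."""
    index = defaultdict(lambda: defaultdict(list))
    for r in records:
        index[r.challenge][r.person].append((r.program, r.outcome))
    return index


index = build_expertise_index(HISTORY)
for person, episodes in index["mab_scale_up"].items():
    print(person, len(episodes), [outcome for _, outcome in episodes])
# Lead X 3 ['on_time', 'delayed', 'on_time']
```

A checkbox would record only that both leads "have scale-up experience"; the index keeps which modality, how many times, and how it went.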

Technology can help, but only if it's built on the right philosophy. Planning systems designed around traditional assumptions will optimize for the wrong variables. They'll balance utilization rates, minimize bench time, and forecast headcount needs with increasing precision, all while perpetuating the fundamental matching problem that spawns committees downstream.

The organizations that make this shift will discover something counterintuitive: they may need fewer people, not more. When you match expertise to challenge, you don't need the oversight layers that exist to compensate for mismatched assignments. When you trust that the assigned person can handle the specific complexity, you don't need six reviewers validating their judgment. Decision cycles compress. Committees shrink. Programs accelerate.

This is the opportunity that Operations AI was built to address. Not another planning tool that treats humans as interchangeable FTEs, but an intelligence layer that helps organizations understand which specific expertise exists, where it resides, and how to match it to the challenges that will determine whether medicines reach patients on time.

The hiring and firing cycles will continue as long as organizations plan by headcount. The committees will proliferate as long as organizations can't trust their resource assignments. The accountability gaps will persist as long as governance is asked to solve a problem that originates in resource allocation.

The philosophical shift is available to any organization willing to ask a different question: not "how many people do we need?" but "which people do we need, for which challenges, and do we have them?"

The Question Only You Can Answer

Every organization in this industry knows the pattern. The hiring surges that don't produce proportional output. The layoffs that somehow eliminate the exact expertise the next program needs. The committees that exist because no one trusts that the assigned person can handle the complexity. The flat productivity curve that persists despite decades of investment.

We know the pattern. We've accepted it as the cost of doing business in a complex, regulated industry.

But acceptance has a price.

Every committee meeting that exists because we couldn't trust the assignment: that's time not spent advancing the program. Every bold decision that defaulted to the safe option because accountability was too diffused for the reward to be worth bearing the risk: that's a potential breakthrough that didn't happen. Every month added to a timeline because governance had to compensate for expertise matching failures: that's a patient who waited longer than they had to.

The question isn't whether this pattern exists. Everyone reading this has seen it.

The question is whether you'll be the one who breaks it.

Not through another restructuring. Not through another planning tool that optimizes the wrong variable. But through a different question asked at the moment of assignment: Is this the person whose specific expertise matches this specific challenge?

The organizations that ask this question will discover they've been solving the wrong problem. They weren't short on headcount. They weren't short on governance. They were short on trust: the kind of trust that only comes from knowing that the person assigned has earned the right to own the outcome.

One team, one program, one assignment at a time. Start by asking: who in your organization has actually navigated this exact complexity before? Not who is available. Not who has the right title. Who has the expertise this moment demands?

The answer might surprise you. It might be someone two levels down who handled a similar challenge three years ago. It might be someone in a different function whose experience transfers. It might be that you don't have this expertise at all, and now you know what you're actually hiring for.

But you won't know until you ask the question. And you won't ask the question until you accept that headcount was never the answer.

Forty-two thousand seven hundred people affected last year. The cycle will continue, unless it doesn't.

The choice isn't the industry's to make. It's yours.

References

- BioSpace. "Biopharma's Axed 47% More Employees Year-Over-Year." BioSpace, January 2025. biospace.com

- BioSpace. "Layoff Tracker: Biopharma Job Cuts." BioSpace, January 2025. biospace.com

- AMS. "Why Pharma and Life Sciences Need to Rethink Talent Attraction." AMS Research, 2024. weareams.com

- McKinsey & Company. "The 8 Ingredients to Biopharma R&D Productivity." McKinsey Life Sciences Practice, 2024. mckinsey.com

- McKinsey & Company. "Simplification for Success: Rewiring the Biopharma Operating Model." McKinsey & Company, January 2025. mckinsey.com

- McKinsey & Company. "Strengthening the R&D Operating Model for Pharmaceutical Companies." McKinsey & Company. mckinsey.com

- Tufts Center for the Study of Drug Development. "New Estimates of the Cost of a Delay Day in Biopharmaceutical Development." Tufts CSDD, 2024. pubmed.ncbi.nlm.nih.gov

- Hanna TP, et al. "Mortality due to cancer treatment delay: systematic review and meta-analysis." BMJ, 2020. pmc.ncbi.nlm.nih.gov

- Raphael MJ, et al. "Association of Treatment Delays With Survival in Patients With Cancer." JAMA Oncology, 2025. pmc.ncbi.nlm.nih.gov

Further Reading

- Four Companies Wearing One Logo: The Architecture of Disconnection — How functional silos create the structural foundation for resource fragmentation

- Why Connecting Data Isn't Enough: The Coordination Imperative — The difference between visibility and true coordination across organizational boundaries

- The $25B AI Paradox: Why Pharma Is Missing Its Biggest Opportunity — How AI investment flows to discovery while operations remain underserved

- Employee Productivity at Top Medicine Innovators — What revenue per employee reveals about operational efficiency across the industry

- Multibillion Dollar Portfolio Decisions: When Guesstimates Aren't Enough — Why resource allocation decisions deserve more rigor than historical patterns provide
