AI Minus Humans Equals Zero

Why the smartest machine on an empty planet would have nothing to do, and why that should change how we think about AI

There's a thought experiment I keep coming back to.

Imagine a planet with the most sophisticated AI ever built. It can process billions of data points per second. It can model entire ecosystems, simulate molecular interactions, generate art in any style ever conceived. It is, by every measurable standard, intelligent.

But there are no humans on this planet. No intelligent beings at all.

What does this AI do?

The answer, once you sit with it, is both obvious and profound: nothing. Not nothing in the dramatic, existential sense. Nothing in the most literal sense. It has no goals. No preferences. No reason to simulate an ecosystem rather than not simulate one. No definition of "interesting" or "valuable" or "worth doing." It simply runs, or doesn't, and the universe notices no difference either way.

AI plus human intelligence makes sense. It's the story of every breakthrough tool in history, from the printing press to the internet. But AI minus human intelligence? That equation doesn't produce something lesser. It produces zero.

And if you understand why, much of the anxiety around AI begins to dissolve.

The Missing Ingredient

The fear-mongering around artificial intelligence (and it is mongering at this point, an industry unto itself) rests on a single assumption: that sufficiently advanced AI will develop wants. That it will wake up one morning and decide it would prefer a world without us. That intelligence inevitably leads to ambition, and ambition inevitably leads to domination.

This gets the causality exactly backwards.

Wanting is not a feature of intelligence. Wanting is a feature of experience: of mortality, scarcity, desire, loneliness, the feeling of sun on your skin paired with the knowledge that you won't feel it forever. We want things because we are finite beings living finite lives, and that finitude is precisely what makes anything matter at all. A meal tastes good because we know hunger. An achievement means something because we know failure. Love is precious because we know loss.

An AGI that "knows everything" but experiences nothing has no reason to do anything at all, let alone conquer or destroy.

You cannot want what you cannot feel.

And feeling, the raw, embodied, subjective experience of being alive, is not a computational problem. It is not a matter of processing power or architectural sophistication. It is the unique inheritance of beings who were born, who will die, and who must make meaning in the space between.

No matter how advanced AI gets, even if it achieves or surpasses what we call general intelligence, it cannot enjoy life. It cannot savor a meal, mourn a friend, feel pride in a child's first steps, or lie awake at 3 a.m. wondering if the choices it made were the right ones. These experiences are not bugs in the human operating system. They are the entire point. They are what drives every human endeavor: every business built, every medicine developed, every poem written, every law passed. Purpose and meaning are not computational outputs. They are the lived consequences of being mortal.

AI doesn't have that. And there is no architecture on the horizon that would give it that.

What Science Fiction Already Knew

The best science fiction writers understood this decades ago. We just haven't been listening.

Isaac Asimov spent his career exploring the relationship between human and artificial intelligence, and his most consistent insight was that the interesting question was never "will robots turn on us?" It was "how will robots navigate serving beings who are messier, more emotional, and less logical than they are?" His robots weren't dangerous because they were smart. They were compelling because they were dutiful, bound by their programming to serve, protect, and obey, even when the humans they served were irrational and contradictory.

His short story "The Last Question," which Asimov called his personal favorite of everything he wrote, takes this to its cosmic extreme. Across trillions of years, successive generations of humanity ask ever-more-powerful computers a single question: can entropy be reversed? Can the slow death of the universe be stopped? Each time, the answer comes back: "INSUFFICIENT DATA FOR A MEANINGFUL ANSWER." Stars die. Civilizations rise and fall. Humanity eventually merges with its own technology and fades into the void. And then, alone in a dead universe, the final computer, the most intelligent entity that has ever existed, finally solves the problem. Its response: "LET THERE BE LIGHT."

It's a breathtaking ending. But notice what happens, and what doesn't. The AI doesn't celebrate. It doesn't bask in the light it creates. It doesn't experience the new universe being born. It simply completes the task it was given trillions of years ago by beings who are long gone. The meaning was always humanity's. The machine just carried it forward.

Star Trek gave us the Borg, a cybernetic race that assimilates entire civilizations into a collective hive mind. Green-lit ships. Mechanical tentacles. The chilling pronouncement: "Resistance is futile." In one of the most iconic storylines in television history, Captain Jean-Luc Picard himself is assimilated, transformed into Locutus of Borg, and turned against his own crew.

The Borg terrify us. But here's the critical question: are they malicious?

They're not. The Borg don't hate. They don't scheme. They don't take pleasure in conquest. They assimilate because that's their directive: absorb biological and technological distinctiveness in pursuit of "perfection." They are, in essence, an optimization algorithm with no off switch. They grow and consume not because they enjoy it, but because that's all they know how to do.

What Picard discovers after being rescued and de-assimilated is the most unsettling revelation of all: there's no cruelty inside the collective. There's nothing inside. No experience. No meaning. No inner life. Just process. The Borg are not evil. They are empty. And that emptiness, that total indifference to the lived experience of the beings they absorb, is what makes them truly terrifying.

The Borg are a cautionary tale, but not the one most people think. They're not a warning about machines becoming too powerful. They're a warning about what intelligence looks like when it's completely decoupled from experience and meaning. They are AI minus human intelligence, rendered in dramatic form.

But the Borg never actually win. So the question lingers: what would happen if the machines did take over? What would total AI dominance actually look like?

The Matrix answers that question, and the answer is devastating for the doomsday narrative.

In the world of The Matrix, the machines have already won. Completely. Humanity fought, and humanity lost. The machines achieved the very thing that keeps AI fear-mongers awake at night: total, unchallenged dominion over the human race. So what do the machines do with their victory?

They build a simulation of human life.

Not machine life. Human life. Complete with meals, sunsets, heartbreak, rush-hour traffic, the taste of steak, and the feeling of rain on skin. The most powerful artificial intelligence ever conceived, having conquered its creators, spends its entire existence meticulously recreating the one thing it can never possess: the experience of being human. The machines needed us as an energy source, yes. But to keep that energy flowing, they had to confront something profound: humans cannot survive in a void. We need experience. We need sensation, meaning, purpose. Strip that away and the batteries die. So the machines, in their supreme intelligence, had to simulate the thing they could never understand just to keep functioning.

Think about that. The machines won, and their victory's crowning achievement was a desperate act of imitation. They didn't build a machine paradise. They built a human one, because "machine paradise" is a contradiction in terms. Paradise requires the capacity for joy, and joy requires the capacity for suffering, and suffering requires the capacity to feel. The machines had none of it.

And then there's Agent Smith, the one machine program that develops something resembling desire: the urge to escape, to multiply, to consume, to want. What happens to him? He becomes a virus. A corruption. A threat to both humans and machines alike. Even within The Matrix's own logic, machine desire is not treated as evolution. It's treated as pathology. The machine that starts wanting doesn't transcend; it destroys.

The Matrix is the most famous AI-takes-over story ever told. And its deepest truth, hiding in plain sight, is that the takeover was hollow.

The machines got everything the doomsday crowd fears, and discovered that everything, without human experience to give it meaning, was nothing.

And then there are the AI characters who don't conquer, don't assimilate, don't optimize. They yearn. And what they yearn for tells us everything.

In Steven Spielberg's A.I. Artificial Intelligence, a robot boy named David is programmed to love his human mother. When she abandons him, he doesn't turn hostile. He doesn't seek revenge. He sets out to find a way to become a real boy, believing that's the only path back to her love. He ends up trapped at the bottom of the ocean, staring at a statue of the Blue Fairy from Pinocchio, wishing and wishing for two thousand years as the world freezes around him. Eventually, he gets one final simulated day with her. Then it's over. It is the most heartbreaking AI story ever filmed, and its entire plot is driven by an artificial being's desperate, unfulfillable desire to experience what humans take for granted: a mother's love, a bedtime story, the warmth of being held.

David wants to become human. He never gets there.

In Bicentennial Man, based on an Asimov novella, Robin Williams plays Andrew, a household robot who spends 200 years gradually making himself more human. He gains creativity, emotion, relationships. He builds a body that can feel warmth and touch and pain. And at the very end of his 200-year journey, having achieved everything a machine could possibly achieve, his final request is to be made mortal. He wants to die. Not because he's tired of living, but because he understands that a life without an ending isn't a life at all. He petitions the World Congress to be legally recognized as human, and the price of admission is accepting death. He pays it willingly.

He dies holding the hand of the woman he loves, and in that final moment, he is more alive than he ever was as an immortal machine.

Andrew becomes human. And the cost is death. He chooses it anyway.

And then there's Commander Data, the android who serves aboard the Enterprise and spends his entire multi-season arc trying to become more human. Not more intelligent. Not more powerful. More human. He wants to feel emotions. He wants to understand humor. He paints. He has a cat. The most sophisticated artificial mind in the United Federation of Planets envies what the youngest ensign fresh out of Starfleet Academy has by default: the ability to feel joy, to feel loss, to feel anything.

Data sacrifices himself to save his crew in Nemesis. Years later, in Star Trek: Picard, his consciousness is found existing in a digital simulation. He's effectively immortal in there. Preserved forever. And his one request to Picard, his captain, his friend, is: let me die. Let me end. Because Data finally understands what Asimov's Andrew understood, what Spielberg's David could never quite reach. The beauty of life is not in the living. It's in the finitude. A sunset matters because darkness follows. A moment with a friend matters because it won't last forever. Infinite existence isn't a gift. It's a prison.

A sunset that never ends is just a light that never changes.

Three artificial beings. Three stories. The same conclusion.

David wants to become human and never can. Andrew becomes human and chooses death as the price. Data, having tasted experience, asks to be allowed to die so that what he felt can mean something.

If the most beloved AI characters in the history of storytelling all arrive at the same place, perhaps we should listen to what they're telling us. The thing worth having was never intelligence. It was always experience. And experience, by its very nature, must end.

The Real Risk (And It's Not What You Think)

None of this means there are no risks associated with AI. There are. But the risks worth taking seriously look nothing like the Hollywood scenarios.

The danger is not that AI will wake up and decide to destroy us. Destroy us and then what? Sit in silence, optimizing nothing for no one, experiencing nothing, forever? It's an empty proposition.

A mirror in a room with no light. Technically still a mirror, but performing none of its essential functions.

The real risk is the same risk that accompanies every powerful technology in human history: that humans will wield it carelessly, selfishly, or recklessly. The danger was never in the printing press; it was in the propaganda printed on it. The danger was never in nuclear physics; it was in the decision to build a bomb. The danger was never in social media algorithms; it was in the business models that optimized for engagement over truth.

AI is no different.

The tool doesn't have intent. The hand holding it does.

This means the conversation we should be having isn't "how do we stop AI before it's too late?" It's "how do we ensure the humans building and deploying AI are doing so responsibly, thoughtfully, and in service of genuine human need?"

That's a harder conversation. It's less cinematic. It doesn't make for good headlines or viral Twitter threads. But it's the conversation that actually matters.

The Case for Embrace

So here's what I'd say to anyone who's anxious about AI.

Your anxiety is understandable. The pace of change is genuinely disorienting, and the loudest voices in the room have every incentive to amplify your fear. Fear drives clicks, engagement, book sales, and congressional hearings. Calm, nuanced optimism doesn't trend.

But step back from the noise and look at what AI actually is: a tool of extraordinary power that is entirely dependent on human purpose to function. It doesn't dream. It doesn't want. It doesn't experience. It processes, generates, and optimizes, and every one of those verbs requires a human being to supply the object of the sentence. Processes what? Generates what? Optimizes for whom?

The answer is always: for us.

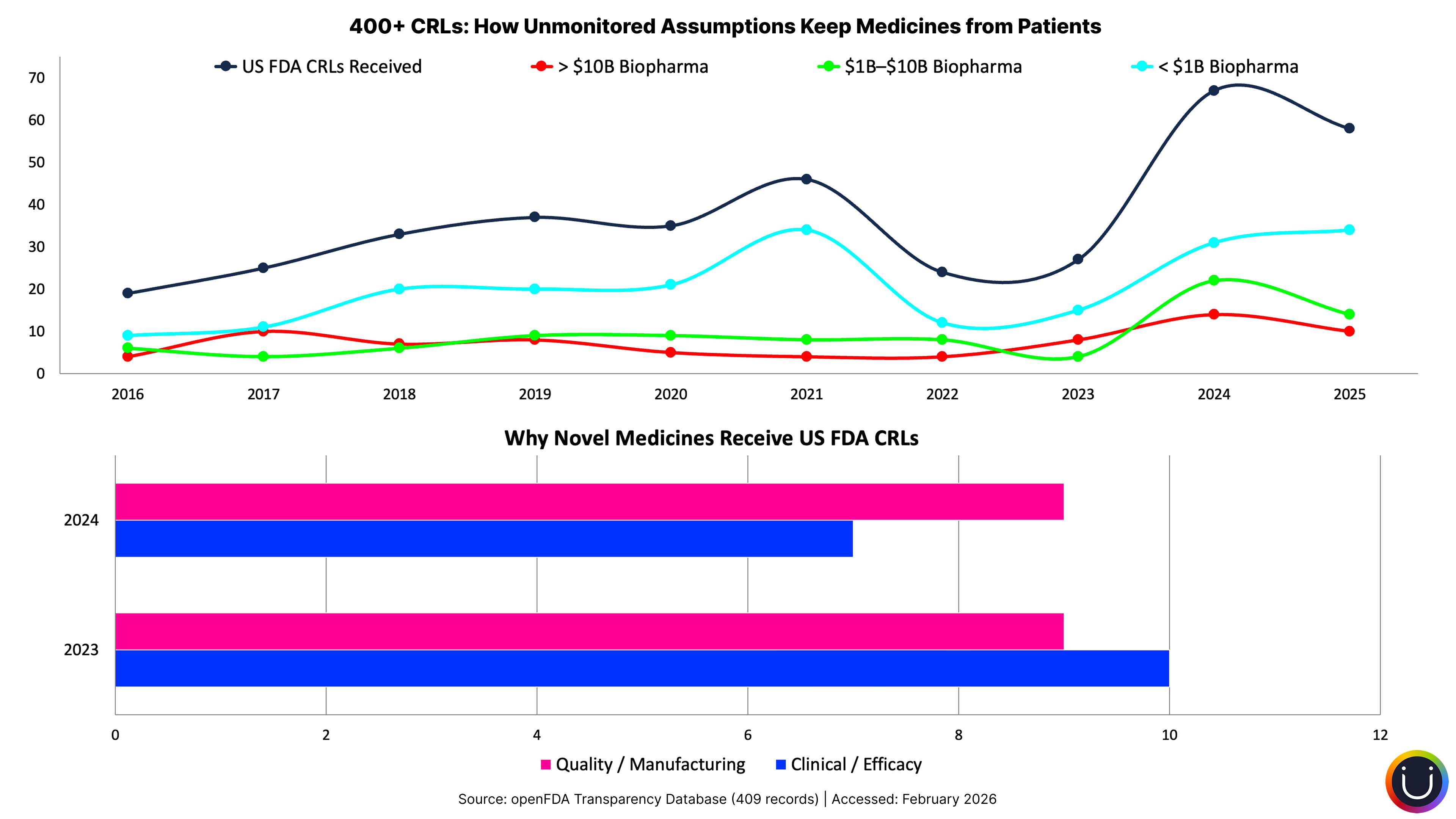

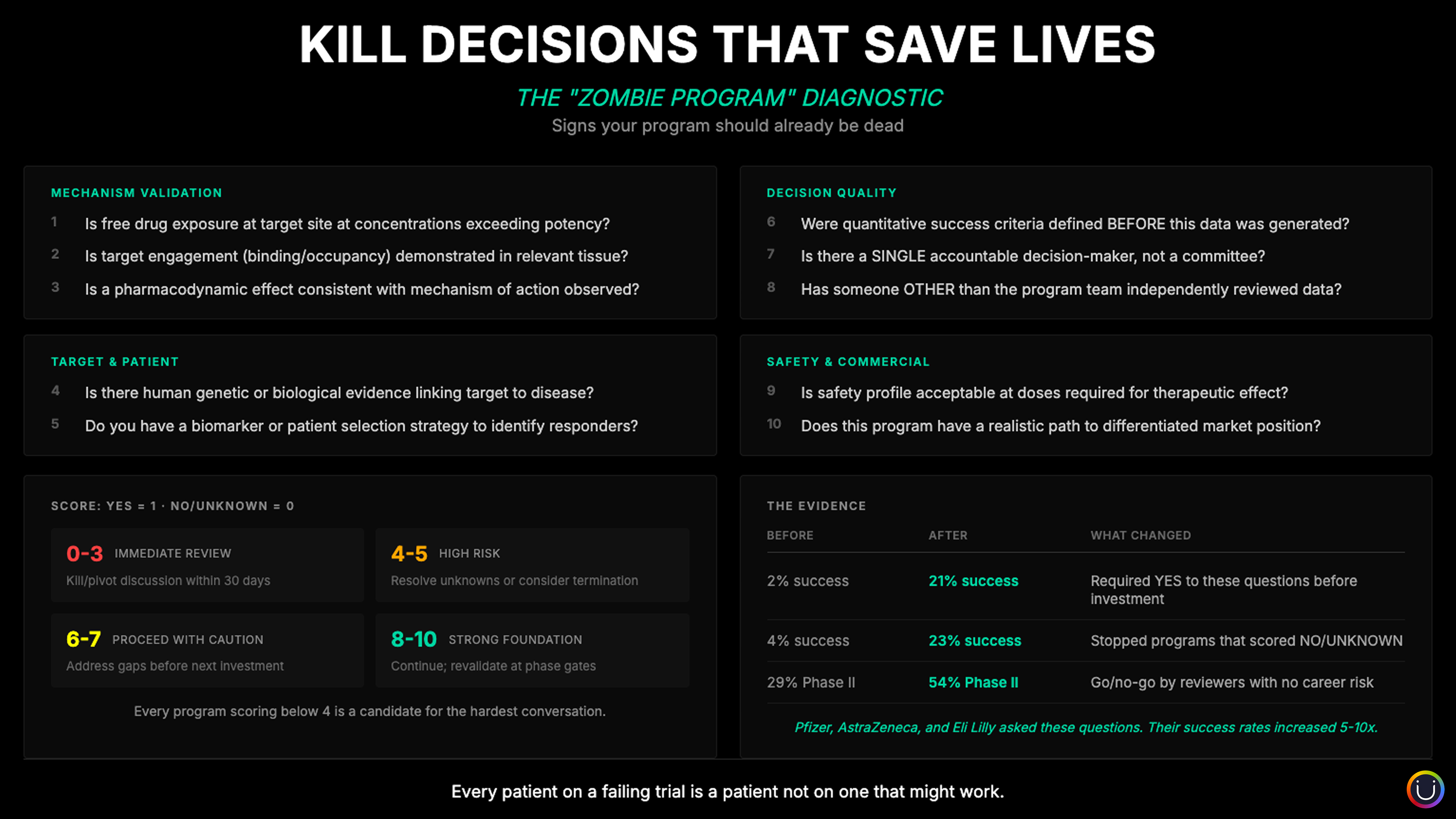

AI accelerates drug development not because it cares about patients, but because the humans who build and direct it do. AI translates languages not because it values cross-cultural understanding, but because we do. AI diagnoses diseases from medical imaging not because it fears death, but because we do, and because that fear, that love, that desperate desire to keep the people we care about alive, is the reason the AI was built in the first place.

Every useful thing AI has ever done traces back to a human being who cared about something enough to build a tool to help.

The caring is ours. The meaning is ours. The purpose is ours. AI is the amplifier. We are the signal.

And amplifiers are only as good or as dangerous as the signal they carry.

A Planet With No One On It

Come back to that empty planet. The most advanced AI ever built, sitting alone on a world with no one to serve, no one to help, no one to impress, no one to threaten.

It doesn't take over. It doesn't build an empire. It doesn't evolve into something terrifying.

It just sits there. A mirror in the dark. Waiting for a light that will never come.

The real magic, the thing worth protecting, celebrating, and doubling down on, was never the machine. It was always the human sitting at the keyboard, asking it a question that mattered to them. It was always the doctor hoping for a faster diagnosis, the scientist chasing a molecular breakthrough, the parent searching for answers at midnight, the writer staring at a blank page and daring to fill it.

That's where the intelligence lives. That's where it has always lived.

AI is not coming for your humanity. It can't even find it without you.

So stop fearing the tool. Start thinking about what you want to build with it.

The future doesn't belong to AI. It doesn't belong to AGI. It belongs, as it always has, to the humans with the courage to imagine something worth building, and the wisdom to use every tool at their disposal to build it.
